Teedra Harvey wanted a good grade for the final paper in her first-year English class. The way to get that, she thought, was a paper that sounded “scholarly.”

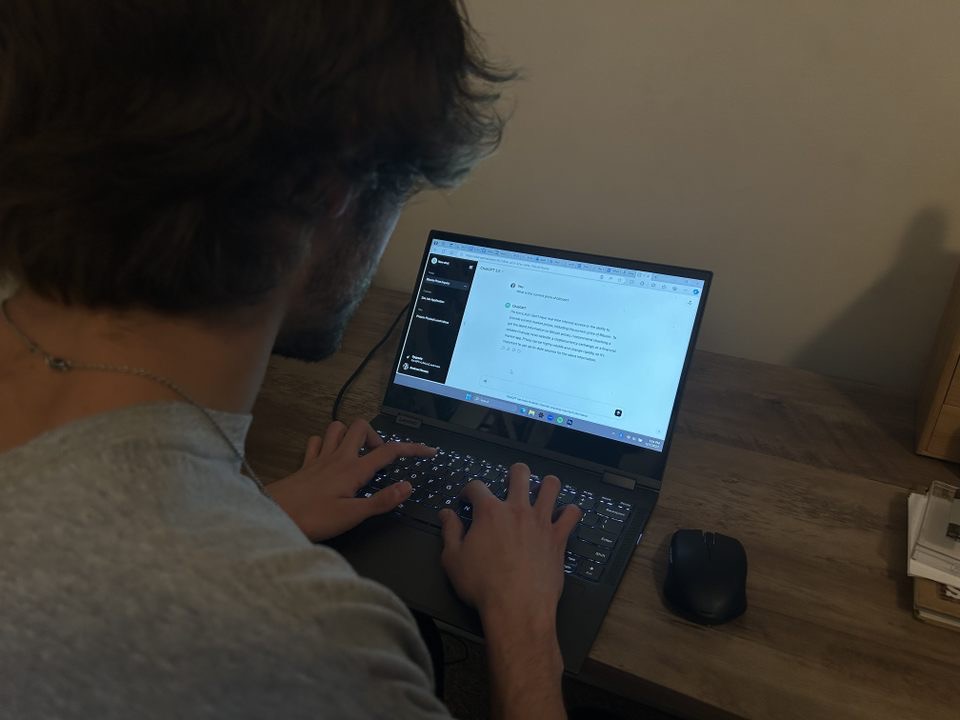

With the due date looming, and unsure how to write in an academic style, Harvey, a Sociology major, logged onto the AI tool ChatGPT, got what she needed, and slotted it into a couple of paragraphs in an otherwise original paper.

The PennWest California student has followed in the footsteps of so many college students and has opted to use artificial intelligence tools to help her complete her papers and assignments. If you ask her now, she will tell you that it was blatantly unfair of the professor to give her a zero, when other students do not turn anything in at all. According to Harvey, using AI in the way that she did should not be considered cheating.

“Some people just don’t know how to put essays together,” she said. “Sometimes you need that help. The professors ask for outrageous things too, so sometimes you think ‘why don’t I just use AI?’”

Harvey said that she plans on using AI in the future, as long as the professor in question is okay with it. In the meantime, Harvey wants universities like PennWest to solidify their AI policies on a wider scale, and she wants professors to encourage the use of AI more.

“Technology is only getting better as time goes on, so how are you going to shut something out that’s public to everyone?” she said. “I feel like they should make it accustomed for us to use it.”

According to University Business, an educational magazine, only 3% of colleges and universities have adopted official policies regarding AI. PennWest California, like many others, does not have one, at least not yet. Former Interim Provost Daniel Engstrom put it bluntly.

“AI is not specifically called out in a policy,” he said. “It falls under the Academic Integrity policy.” PennWest’s AI policies are “under review,” according to Engstrom, but he is aware that some professors allow their students to use AI as a resource.

For now, AI tools are expressly forbidden and are defined as cheating unless otherwise permitted. However, even PennWest’s own AI detection tools had to be disabled earlier this year due to outside pressure. Turnitin, an anti-plagiarism service that PennWest uses, had its AI detection feature shut off after wider national concerns in the media about false-flagging innocent students.

The problem is not isolated to PennWest either. Ryan Dubos, a sophomore Pharmacy major at the University of Pittsburgh, says that he only has so much energy to give to the classes he cares about. AI helps to fill in the gaps.

“I totally plan on it [continuing to use AI]. Now, I’m not saying I’m going to use it for everything like calculus or physics or biochemistry,” he said, “but I will definitely use it for classes that I believe are a waste of time.”

Dubos said that students using AI on their respective campuses is a very common sight.

While some students are experimenting with the use of AI, administrations are sullenly dragging their feet in response, according to one university faculty member.

PennWest California English Professor M.G. Aune hopes that other schools will be proactive about this emerging problem, and that his university will follow suit once they are. However, the problem is already at his doorstep.

“I have caught students using it,” he said, “and depending on the assignment, sometimes I’ll ask them to rewrite it and sometimes I’ll give them a zero. It all depends for me, but the important thing is, you’re going to learn something by writing this paper. Since you used ChatGPT, you didn’t learn it. So go back and learn it. For other assignments, you had an opportunity to learn something and you chose not to, so I’m not going to give you another opportunity.”

Aune, who started at California University of Pennsylvania in 2007, has his own personal safeguards in place to protect against AI cheating, including rewriting his assignments, a new and widespread strategy among professors.

Leah Chambers is the Department Chair of English, Philosophy, and Modern Languages at PennWest. She has an alternate and progressive stance on AI and its uses. The majority of the blame doesn’t lie with the students, she says; it lies with the professors.

“Instead of working to prevent the use of AI, we need to consider what it means for teaching, and how we can adapt,” she said. “There is a difference between assigning writing and teaching writing. If I give an assignment that a student can reasonably approximate using AI, then maybe I’ve given a bad assignment.”

Chambers said that utilizing AI is “hardly different” than using any other web tool to synthesize information. She emphasized that educators and administrations should “assess the process as much as the product.” If a professor’s assignment can be easily completed using ChatGPT, then the assignment needs to be adjusted. Some professors, like Chambers, believe that their assignments make cheating extremely difficult.

Dana Driscoll, Professor and Director of the Kathleen Jones White Writing Center at Indiana University of Pennsylvania, echoed Chambers’ comments on this newfound effort to combat AI: maybe it is not about the students cheating at all.

“Faculty can do a lot to develop assignments that encourage students to use AI in productive and ethical ways as opposed to doing the work for them,” she said. “So do students cheat and use AI? Yes, but well-designed assignments can often circumvent that.”

Still, like other online tools before it, AI can be used to cheat blatantly on papers and assignments by way of plagiarism. PennWest has made it clear that its efforts are aimed at fighting that, but students seem split. While Harvey sees no issue in using it freely, others have reservations.

Daniel Kordich, a Business major at PennWest California, has used AI for help with homework and to come up with ideas for papers. Importantly, however, Kordich has never directly plagiarized an assignment using an AI tool. That, he says, is the difference.

“I don’t consider it cheating, unless you simply copy-paste what the AI gives you and turn it in,” he said. “I think it depends on the assignment. If it’s a test, it’s absolutely cheating. But if it’s homework or something that’s helping you learn the material, then I would say no.”

The line between cheating and simple assistance is blurred thanks to this new and emerging technology, and yet PennWest has decided that a simple take is the best one: “No use of electronic devices when not expressly permitted to do so.”

Other institutions in the area have moved on the issue a bit more quickly. Seton Hill University has added to its academic integrity policies: as of this academic year, the use of AI-generated content without attribution is officially listed as plagiarism. However, the university now goes one step further thanks to one faculty member.

Emily Wierszewski serves as the Chair of the English Department at SHU. She says that her department has taken some major leaps as AI has advanced, including an overhaul to academic integrity and first-year course policies.

“I’ve developed a policy specific to our first-year writing courses and we’ve had some discussions about how our field is responding to AI,” she said. “We also have a special section of our first-year writing digital textbook devoted to readings about AI and whether it should be part of the writing process.”

Wierszewski’s policy, written out and given to all first-year writing students through the syllabi, includes a rationale as to why a student passing work off as their own would be detrimental to their education.

“It is essential that you complete all of the assignments in this class yourself,” it says. “These skills cannot be developed if you rely on other people or entities (including artificial intelligence tools like ChatGPT) to do the difficult but important work of writing and thinking for you.”

Sean Madden, a PennWest California Professor in the History, Philosophy & Modern Language Department, had a similar take on the issue. Loud-spoken and gruff, he gave an answer that will not be found in any official course syllabus.

“All of my papers come in drafts so the author has the opportunity to explain their work,” he said. “I have a philosophy about cheating, a cliche almost. If you cheat, it is going to bite you and not me.”

Aune thinks that this issue will be dealt with like all the others: a small group of forward-thinking institutions will band together and form official policies, and then the other schools will join in.

“There’s going to be a group of colleges and universities that engage with it constructively and proactively,” he said. “And then there will be others that engage with it more reactively, seeing it as a problem and spending their time and energy trying to fix it rather than using it as a tool.”

Aune later added his perspective on his own university.

“As an institution, PennWest is being very reactive in my opinion,” he said. “That said, I have colleagues that are the opposite, but those are individual efforts. I don’t think that they’re particularly supported by the university.”